
AI: the best invention since sliced bread or the doomsday of humanity?

The pace of change will never be this slow again.

Artificial intelligence for text and image analytics has been around for years, and listening247 has been carrying out R&D for the social intelligence use case since 2012. Strangely, we haven’t yet published our view on the impact that AI will have on humanity in the near- to medium-term future.

Time to rectify this!

What exactly is AI?

Just like every other buzzword, it means different things to different people. Is there a simple definition of AI that everyone can understand?

You bet!

AI can be classified as weak or strong AI.

Weak AI:

The majority of current AI use cases - such as social intelligence using text analytics (NLP) - fall under weak AI. It usually involves supervised machine learning, though we are increasingly seeing use cases where semi- or unsupervised machine learning is being used.

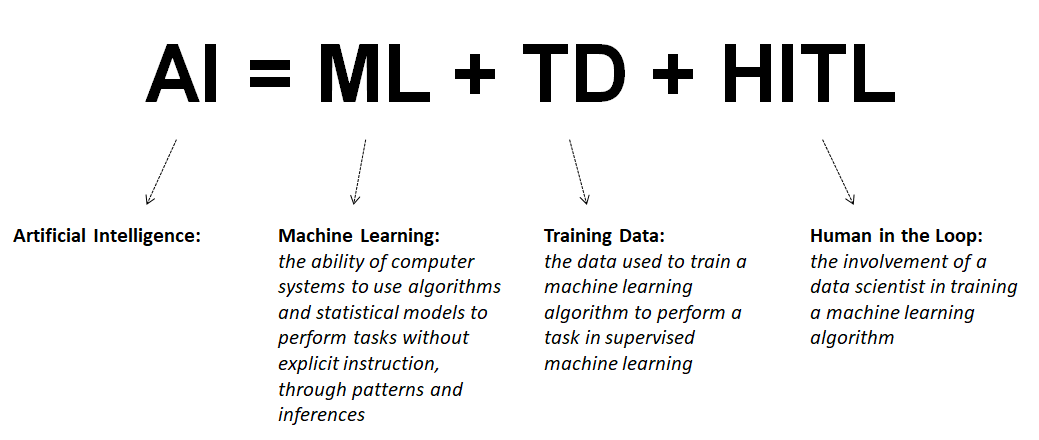

For the time being, let’s define (weak) AI with this simple formula:

Strong AI:

Strong, full or general AI is something different. For most people it is defined by Alan Turing’s test in which, according to Wikipedia, “A machine and a human both converse sight unseen with a second human, who must evaluate which of the two is the machine, which passes the test if it can fool the evaluator a significant fraction of the time”. The optimists among pundits claim it will be with us within 10-15 years; the pessimists say by 2100.

Some people talk about machines with consciousness. To paraphrase the well-known author Yuval Noah Harari: a taxi driver only needs to take us from A to B, and we are not interested in how he feels about the latest Trump news or the sunset; thus an autonomous car has a good enough AI to do the job without needing to feel or to have a consciousness. The same applies to so many other aspects of work and life.

Will machines ever be able to feel? Is that necessary for strong AI to exist?

AI’s impact on humanity:

“Two billion people will be unemployed by 2050.”

“Humanity is in danger of being taken over by machines.”

“This could spell the end of the human race,” said the late Stephen Hawking. Elon Musk and Bill Gates are also often quoted expressing similar opinions.

The flipside of the coin is that humanity should choose to see a positive version of the future, and then strive to make it happen. Rather than worrying about unemployment, we should be looking forward to spending more time on the beach and pursuing our passions and hobbies to perfection.

I dream of days spent philosophising in a circle of close friends (also fellow philosophers) about the meaning of life… and not just human life, as this has been covered by Plato, Aristotle and others; maybe we will be focussing on the lives of robots who can fall in love, or superhumans with chips in their brains who are “a-mortal” as opposed to immortal (stuff for another blog post).

Doing market research using AI is a close second to philosophising.

Universal Basic Income (UBI):

The best idea floating around when it comes to managing unemployment brought on by the impact of AI is the UBI. Having said that, being the best idea so far does not necessarily make it a great idea. Too many people with some means and lots of time on their hands may ultimately become a curse for humanity. Possible outcomes include:

- Boredom to death - people may literally commit suicide due to not having a good enough reason to get out of bed every day

- Resentment towards AI or the rich (or both):

  1. Criminality may rise

  2. Terrorism incidents may become more frequent

  3. A movement could start against corporations - a revolution

- Increase in radical religious movements - as people will have more free time

- A couple of rather positive ones:

  1. Renaissance of the arts

  2. The return of full time philosophers (i.e. my friends and I)

Ethical and Legal Framework:

We need new laws and we need to figure out what moral compass we want to ingrain in the autonomous machines of the future - if that is at all possible.

We want to ideally avoid scenarios described in science fiction films such as Ex Machina and I, Robot.

Consider Isaac Asimov’s Three Laws of Robotics from the previous century:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

At first glance they seem reasonable; he certainly thought that if these principles were applied, humanity would be safe from destruction by AI-powered machines. Apparently he was wrong.

What we will need is machine ethics for superintelligence, and the laws or principles cannot be chauvinistic as Asimov’s are. Humans cannot realistically expect to be the boss of machines that are a million times smarter than them.

The Singularity Moment:

Ray Kurzweil, the author of ‘The Singularity Is Near: When Humans Transcend Biology’, predicts that the singularity moment will happen around 2045. He says in his book:

“I regard someone who understands the Singularity and who has reflected on its implications for his or her own life as a ‘singularitarian’.”

I guess thinking about and writing this article - I will not claim I understand the Singularity - may make me a singularitarian! What do you think?

There is an impressive and humbling definition of this moment that I heard from a fellow YPO member, Nikolaus Weil, who among other things is the Executive Director of Singularity University Germany, at a CEO (organisation) recruitment event.

I will paraphrase, but the gist is: “It is the moment in time at which machines will acquire the same knowledge and capability as the whole of humanity and, in the next 5 minutes, by continually improving themselves, will become millions of times smarter than humans”.

AI = Augmented Intelligence:

Some people like to challenge the notion of artificial intelligence, so they have given the acronym AI a new interpretation, namely “Augmented Intelligence”.

Vernor Vinge came up with these four options for how the singularity might manifest:

1. The development of computers that are "awake" and superhumanly intelligent.

2. Large computer networks (and their associated users) may "wake up" as a superhumanly intelligent entity.

3. Computer/human interfaces may become so intimate that users may reasonably be considered superhumanly intelligent.

4. Biological science may find ways to improve upon the natural human intellect.

Options 3 and 4 may involve the augmentation of human intelligence to a superintelligence.

The problem with these two options is that only super-rich people will be able to afford them, so we would end up with some serious inequality issues between new castes, or should we say new breeds, of humans.

I think this article is running away from me. It feels like one thing leads to another, one thought to the next; there is no end and I feel like getting a beer, so I will end it - abruptly - here.

My Fitbit is asking me to start preparing to go to bed... futurism is a very tiresome business!